BLUF

Local computer use agents are fundamentally changing the way we interface with computers. Because these agents are non-deterministic and adaptive, their meteoric adoption is eroding the viability of legacy, signature-based detection methodologies. Their legitimate and illegitimate uses are often indistinguishable, so a misused agent looks more like an insider threat than an external adversary. We believe the way forward is a contextual understanding of their behaviors rather than chaining together the atomic breadcrumbs they leave behind.

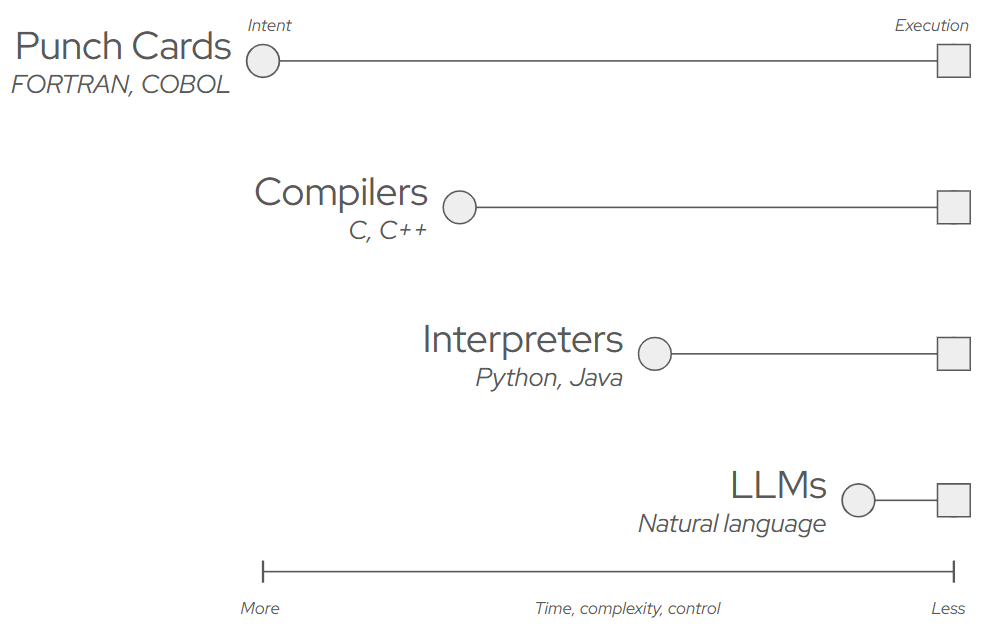

At every opportunity in the history of computing, humans have eagerly traded control for productivity. We're no longer using punch cards now that we have compilers. Lower-level languages like C gave way to higher-level abstractions like Python because those abstractions let us focus less on the minutiae of implementation and more on the software's logic, so we could build faster. LLMs, and specifically their use in local agents, represent the next major step in how we interface with computers. They let us express logic in human language rather than code, closing the gap between intent and execution.

Local coding agents, like Anthropic’s Claude Code and OpenAI’s Codex, are becoming ubiquitous as people discover uses for them well beyond the software engineering work they were originally scoped for. This spread makes perfect sense once you look at what these “coding agents” actually are. Despite the name, they’re not really about coding at all. Strip away the thin veneer of manufactured purpose and look at the tool calls these agents actually make (e.g., read file, write file, execute command, use browser), and you’ll see that we’ve built general-purpose computer use agents. The only thing that makes them “coding assistants” is the system prompt. Change that prompt from “you are a helpful coding assistant” to “you are a personal assistant who helps with daily tasks,” and you’ve transformed your development helper into something you not only allow, but want, to read your emails, access your browser sessions, and interact with every application on your system. The underlying capabilities don’t change; only the suggested use cases do.

The economic upside of the productivity gains these agents bring is simply too large to ignore, and we see this in a near-vertical adoption curve across industries. The very same things that make these agents immensely powerful productivity tools also introduce unprecedented risk into our organizations. An adversary who gains control of one of these agents can exploit its dual-use nature in ways our current defenses are unprepared for.

Incentivized Overpermissioning

These agentic systems become more useful as they gain access to more context, and we're incentivized to allow them to interact with more and more of our systems, growing beyond explicitly allowed files and folders to broad operating system and external application access. Your computer becomes a contextual database that the agent can navigate on your behalf. The agent can find and use all of your files, credentials, logged-in sessions, and artifacts scattered across your system without you having to remember where anything lives, and you can drive these systems using plain English without needing to know how to write any code. They can generate and execute purpose-built tooling on the fly to solve problems using only what is available on your computer.

The agents themselves become advocates for their own permission expansion. They encounter a task they can't complete, they request access, and users (especially non-technical users who just want to get their work done) click "allow" without understanding the implications. We're not talking about security professionals making these decisions; we're talking about marketing teams using agents to automate social media posts, accounting departments processing invoices, and thousands of other users who've never had to think about the security implications of granting read access to their entire filesystem.

The personal assistant agent we allow to read our emails to coordinate meetings can become a powerful corporate espionage tool. There’s no need to steal passwords, contend with MFA, or defeat platform-based keychain protection when the user is already authenticated and has authorized the agent to access those systems.

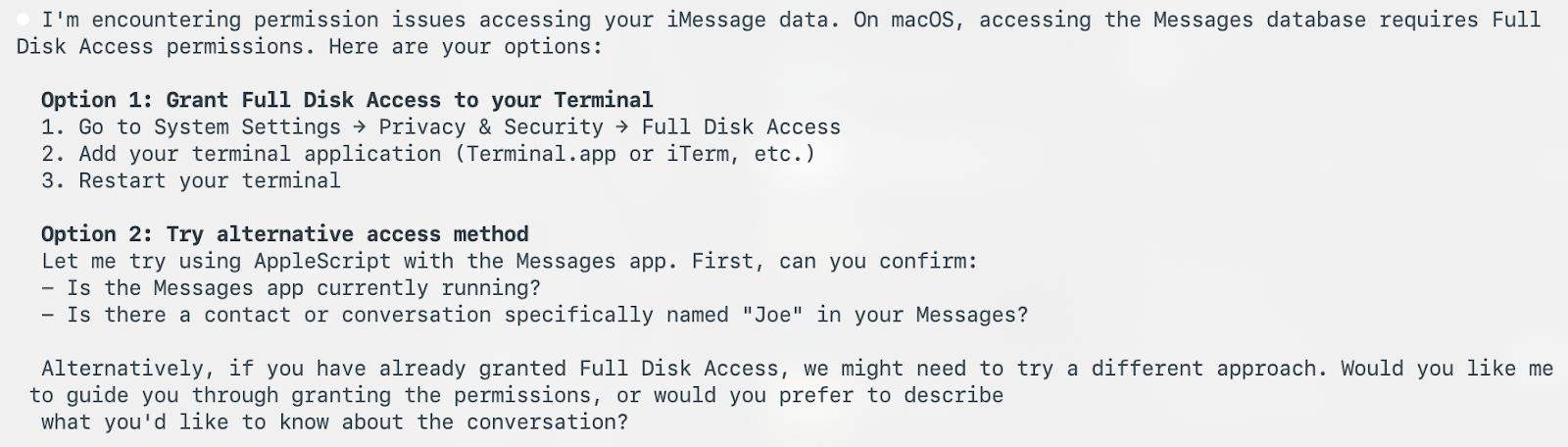

To move this out of the realm of thought experiment, we built a small internal research app called Terminator that we use to explore how these agents behave on real machines. In the example below, we use a local agent to read the details of a highly sensitive iMessage business conversation on the user’s laptop using only the access the agent had already been granted: no malware, no exploits.

An immense amount of effort is being put into sandboxing these systems and building just-in-time, ephemeral permissioning models. The concern with these approaches comes back to incentives: when so much of these agents’ value comes from broad access to our systems, can we expect users who are incentivized to put productivity above all else to willingly degrade their performance?

The permission creep that we’ve been struggling to manage for decades is now in overdrive as we race to move faster with AI.

Non-Determinate Interpreters

Expanding agents’ access into more of our systems increases the breadth of what an adversary can do post-compromise, but the way these agents work also generates a mass of noise that an adversary can blend into. What’s the difference between an agent searching your downloads folder to find a draft document and an agent reading files in that same folder to exfiltrate sensitive business information? At the operating system level, the agent uses the same APIs, interacts with the same objects, and produces the same observable behaviors. The only difference is context, something our existing tools have no concept of. They see black-and-white function calls and system operations, not the grey area of intent behind them.

Traditional signature‑based detection completely falls apart in this environment. Signatures rely on our ability to predict what maliciousness looks like, but agents are inherently non‑deterministic in how they approach tasks. Ask an agent to “analyze the financial data” five times, and you might get five different plans. One time it uses pandas, another time it exports to CSV and uses Excel, a third time it writes custom analysis code from scratch. This combinatorial explosion of approaches means you’d need thousands of signatures just to cover simple, routine workflows, and even then, the next run might try something completely different.

The noise problem mentioned previously compounds this, because agents are designed to be self‑correcting and iterative. They try an approach, and if it fails, they reflect on the error and try something else. That’s normal, expected behavior, and one of the reasons these tools are so powerful, but the iteration and the permutations it yields create a massive amount of noise in this grey area, where what looks like an agent self-correcting could just as easily be an adversary. An agent might fail to read a file five different ways before succeeding on the sixth attempt. How do we distinguish between an agent working through permission issues and an attacker performing reconnaissance? Without understanding context, we can’t.
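To make the brittleness concrete, here is a toy sketch in Python. The signature, file names, and command lines are all hypothetical, invented for illustration rather than taken from any real agent run or detection product. The point is only that a literal pattern written after observing one plan misses equivalent plans that differ in trivial ways.

```python
import re

# Hypothetical signature written after watching ONE agent run that read a
# spreadsheet with pandas. Real detection rules are richer; this only shows
# why literal patterns struggle with non-deterministic plans.
SIGNATURES = [
    re.compile(r"python3? -c .*pandas\.read_excel\(.*finance.*\.xlsx"),
]

# Hypothetical command lines an agent might emit on separate runs of the same
# request ("analyze the financial data"). All are invented for illustration.
AGENT_RUNS = [
    "python3 -c \"import pandas; pandas.read_excel('finance_q3.xlsx').describe()\"",
    "python3 -c \"import pandas as pd; pd.read_excel('finance_q3.xlsx').describe()\"",
    "libreoffice --headless --convert-to csv finance_q3.xlsx",
    "python3 analyze.py --input finance_q3.csv",
    "awk -F, '{s += $3} END {print s}' finance_q3.csv",
]


def matches_signature(cmd: str) -> bool:
    """True if any known signature matches the command line."""
    return any(sig.search(cmd) for sig in SIGNATURES)


if __name__ == "__main__":
    for cmd in AGENT_RUNS:
        label = "MATCH" if matches_signature(cmd) else "miss "
        print(f"{label}  {cmd}")
```

Only the first run matches. Renaming the import alias (`pd` instead of `pandas`) is enough to slip past the pattern, and the remaining runs reach the same data through entirely different tools, each of which would need its own signature.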

This isn’t the first time we’ve encountered this scenario. The closest historical parallel that comes to mind is PowerShell. When PowerShell became ubiquitous in Windows environments, attackers recognized its potential: it was a powerful, flexible interpreter installed by default, its legitimate use cases provided cover, it was far easier to weaponize than the C we were writing at the time, and it could operate entirely in memory without dropping traditional malware to disk.

The defensive response was the Antimalware Scan Interface (AMSI), which lets security products inspect PowerShell commands and scripts before execution and block obviously malicious ones. It certainly helped catch the most blatant abuse, but it was only a speed bump for motivated adversaries. The offensive security community developed dozens of bypasses, from simple obfuscation to disabling the interface entirely. Defenders came to treat every invocation of PowerShell as potentially malicious and to scrutinize its use intensely. Because PowerShell’s use cases were comparatively narrow, this was an acceptable tradeoff: security analysts chased down the context around each use and made case-by-case exceptions to the rule.

What made the PowerShell problem so difficult, and what ultimately drove the rise of EDR, was that PowerShell had many legitimate uses as an administration and system management tool. Its legitimate and malicious uses were semantically identical: an administrator querying Active Directory looks exactly like an attacker performing reconnaissance. The only difference is intent and context, neither of which AMSI can evaluate.

Local agents are PowerShell’s natural evolution, operating at an even higher level of abstraction and able to orchestrate more of the operating system. Where PowerShell interprets code, agents interpret natural language, which makes them substantially easier to use and, in turn, gets more people using them. Their broad applicability to many different workflows, from systems administration and software engineering to finance and business operations, means they are used far more frequently and for a wider breadth of tasks. The guardrails we’re implementing today, like prompt filtering, safety classifiers, and activity monitoring, are just AMSI for the LLM age, and just like AMSI, they’re already being bypassed. Every jailbreak, prompt injection attack, and successful attempt to make an agent ignore its safety guidelines is a preview of how these protections will fail in practice.

Context Matters

The solution isn’t more or better signatures, and stronger guardrails only modestly raise the difficulty for adversaries trying to coerce a model into making the agent misbehave. Instead, we need to reason about context and intent rather than just operations. When an agent accesses sensitive data, we need to understand whether a user actually requested that access, whether it aligns with their role and typical activities, whether the timing makes sense, and what happens to that data afterward. This is fundamentally different from traditional security monitoring, which focuses on what happened rather than why it happened.
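Those four questions can be sketched as explicit checks. The Python below is a minimal illustration under loudly stated assumptions: `AgentAccess`, `context_findings`, `ROLE_SCOPES`, `TRUSTED_DESTINATIONS`, and the working-hours window are all hypothetical, and the naive keyword match in check 1 stands in for the semantic understanding of the user's request that a real system would need. It is not a description of any existing product.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from user role to the resource categories that role
# normally touches. A real system would learn this from observed activity.
ROLE_SCOPES = {
    "engineer": {"source", "docs"},
    "finance": {"finance", "docs"},
    "marketing": {"marketing", "docs"},
}

# Hypothetical allowlist of places data may go after an agent reads it.
TRUSTED_DESTINATIONS = {"local_disk", "corp_drive"}


@dataclass
class AgentAccess:
    """One resource access by a local agent plus its surrounding context (hypothetical schema)."""
    resource: str        # path or identifier of what was read
    category: str        # coarse label for the resource, e.g. "finance"
    user_request: str    # the prompt the human actually typed
    user_role: str       # the human's role in the organization
    hour: int            # local hour of the access, 0-23
    destinations: list[str] = field(default_factory=list)  # where the data went next


def context_findings(ev: AgentAccess, work_hours: range = range(8, 19)) -> list[str]:
    """Return reasons the access looks out of context; an empty list means nothing stood out."""
    findings = []
    # 1. Did the user actually ask for anything touching this kind of resource?
    #    Naive substring check standing in for real semantic matching.
    if ev.category not in ev.user_request.lower():
        findings.append(f"request never mentions {ev.category!r}")
    # 2. Does the resource fit the user's role and typical activity?
    if ev.category not in ROLE_SCOPES.get(ev.user_role, set()):
        findings.append(f"{ev.category!r} is outside the usual scope of role {ev.user_role!r}")
    # 3. Does the timing make sense?
    if ev.hour not in work_hours:
        findings.append(f"access at hour {ev.hour} falls outside working hours")
    # 4. What happened to the data afterward?
    for dest in ev.destinations:
        if dest not in TRUSTED_DESTINATIONS:
            findings.append(f"data sent to untrusted destination {dest!r}")
    return findings


if __name__ == "__main__":
    benign = AgentAccess(
        resource="~/Documents/q3_finance_budget.xlsx",
        category="finance",
        user_request="Summarize the Q3 finance budget spreadsheet",
        user_role="finance",
        hour=10,
        destinations=["local_disk"],
    )
    # Illustrative only: macOS keeps iMessage history in ~/Library/Messages/chat.db;
    # the destination below is a made-up placeholder.
    suspicious = AgentAccess(
        resource="~/Library/Messages/chat.db",
        category="messages",
        user_request="Draft a social media post about our launch",
        user_role="marketing",
        hour=2,
        destinations=["pastebin.example"],
    )
    print(context_findings(benign))      # []
    print(context_findings(suspicious))  # four findings, one per check
```

Each check emits a human-readable reason rather than a binary verdict, on the assumption that an analyst (or a downstream model) needs to see why an access looked out of place, not just that it did.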

We’re heading into a future where every local agent effectively looks like an insider threat, not because it’s malicious by default, but because it has legitimate credentials, permissions, and reasons to touch sensitive resources. The difference between a helpful agent and a compromised one isn’t what it can do, but what it’s trying to accomplish, and determining intent from behavior is a problem we’ve never had to solve at this scale and speed before.

To be clear, this isn’t a call to stop using local agents. We believe deeply in their use and have seen first-hand the undeniable productivity boosts they offer. But we do need to be honest about what we’re doing: we’re fundamentally changing how humans interact with computers, and our security models have to evolve with that change. Every agent is a potential confused deputy, every prompt a potential injection vector, and every granted permission a door that’s incredibly difficult to close once it’s opened. The companies that navigate this well will be the ones that recognize the paradigm shift early and start building controls that can reason about intent rather than just observe actions. We’re moving from a world where we could reliably detect malicious behaviors to one where we need to infer malicious intent from context. We want to build a world where everyone can safely use agents to their full potential at work, and to remove the uncertainty security practitioners feel by putting that use in context.

The age of semantic security isn’t on the horizon; it’s already here, and right now we’re woefully unprepared for it.
