Security

What Are the Key Risks of Deploying Local AI Agents in Business Environments?

Learn the four critical risk categories of deploying AI agents locally versus in the cloud: data privacy, security, compliance, and operations.

Chris Singlemann

Go-to-market

Security

Artificial Intelligence

Endpoint Security

How IT Asset Management Enhances Cyber Security

Learn how IT asset management strengthens cybersecurity through automated discovery, vulnerability prioritization, and compliance.

Pete Constantine

Product

Endpoint Security

Security

Platform

Security

Aligning Technical Security Controls to NIST

Learn how to translate NIST frameworks into practical technical controls. Map CSF 2.0 functions to actionable security implementations.

Chris Singlemann

Go-to-market

Identity Security

Email Security

Endpoint Security

Identity Security

Essential Identity and Access Management Metrics: Definitions, Examples, and Best Practices

Track IAM metrics that matter: MFA coverage, TTDv, JIT access. Get formulas, benchmarks & NIST-aligned targets to measure identity security.

Joe Kaden

Product

Control Monitoring

Identity Security

Endpoint Security

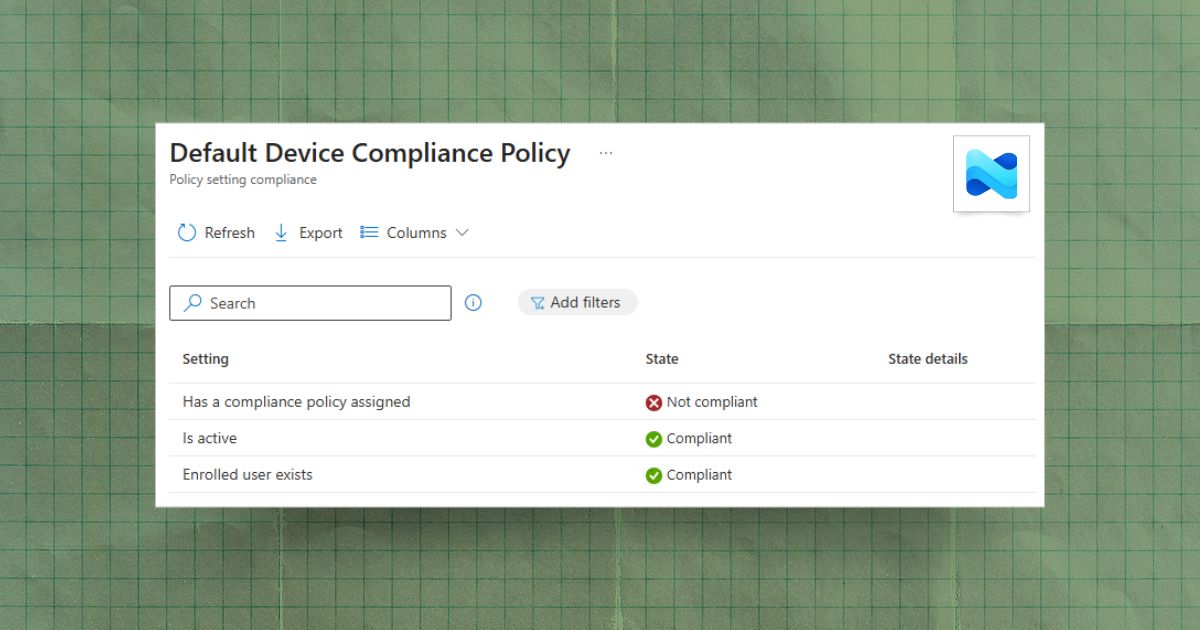

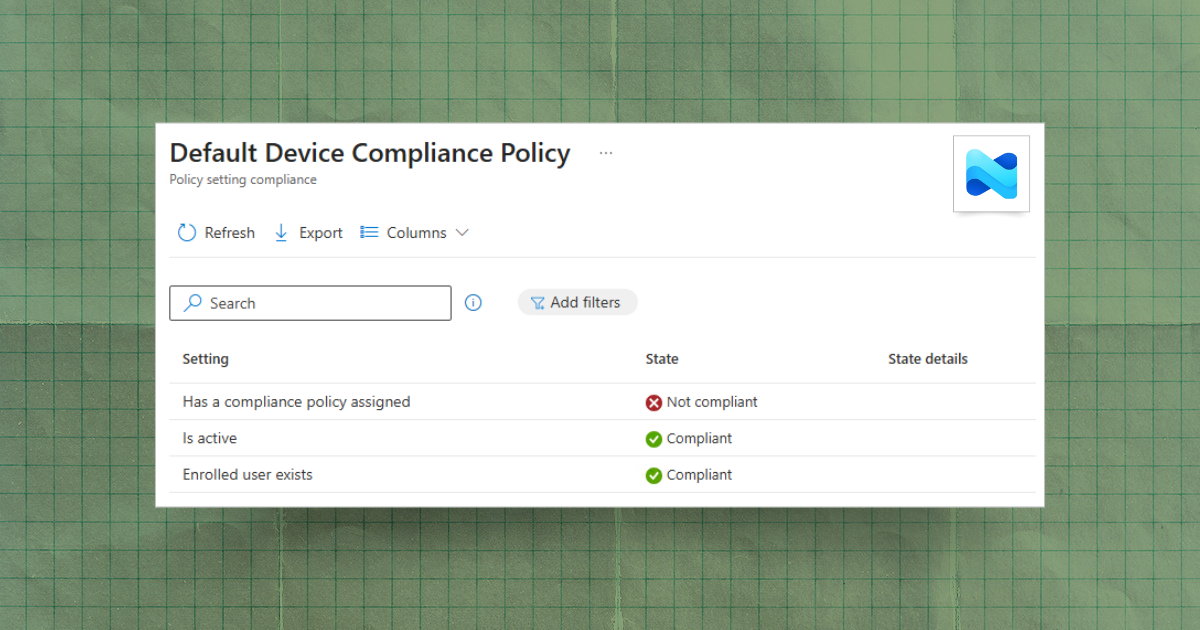

Understanding the Default Device Compliance Policy in Intune

Understand Intune's built-in default device compliance policy, the three checks it enforces, and how to remediate common noncompliant statuses.

Pete Constantine

Product

Microsoft

Control Monitoring

Endpoint Security

Endpoint Security

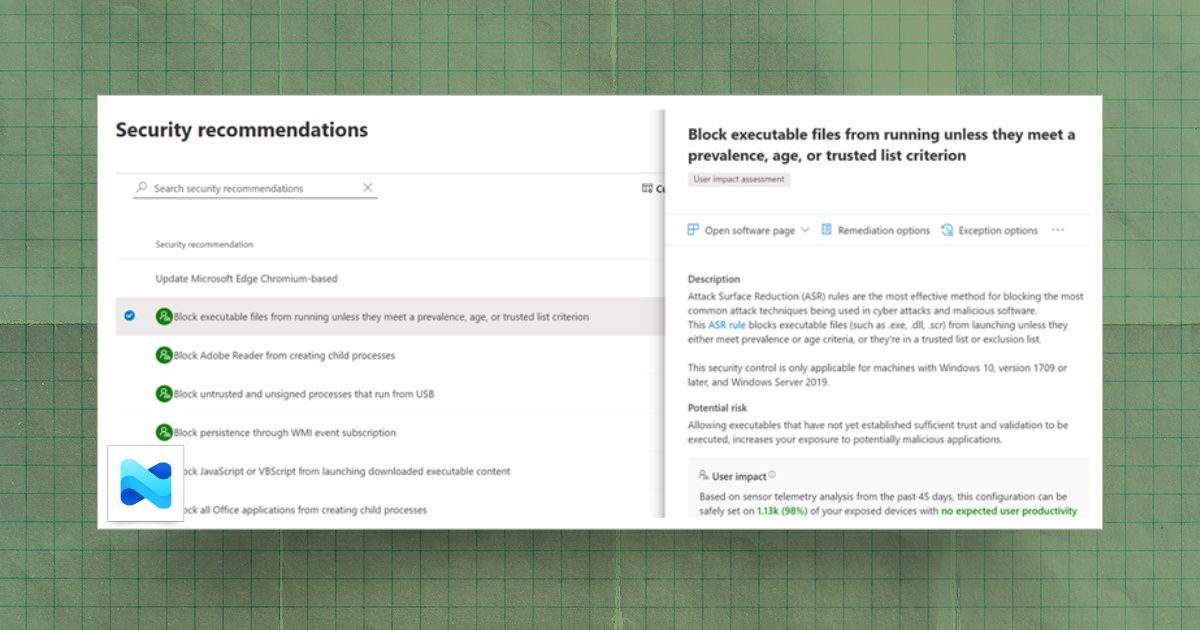

How to Configure Attack Surface Reduction Rules in Microsoft Intune

Step-by-step guide to configuring Attack Surface Reduction rules in Microsoft Intune, from prerequisites to deployment and ongoing monitoring.

Pete Constantine

Product

Control Monitoring

Microsoft

Endpoint Security

Platform

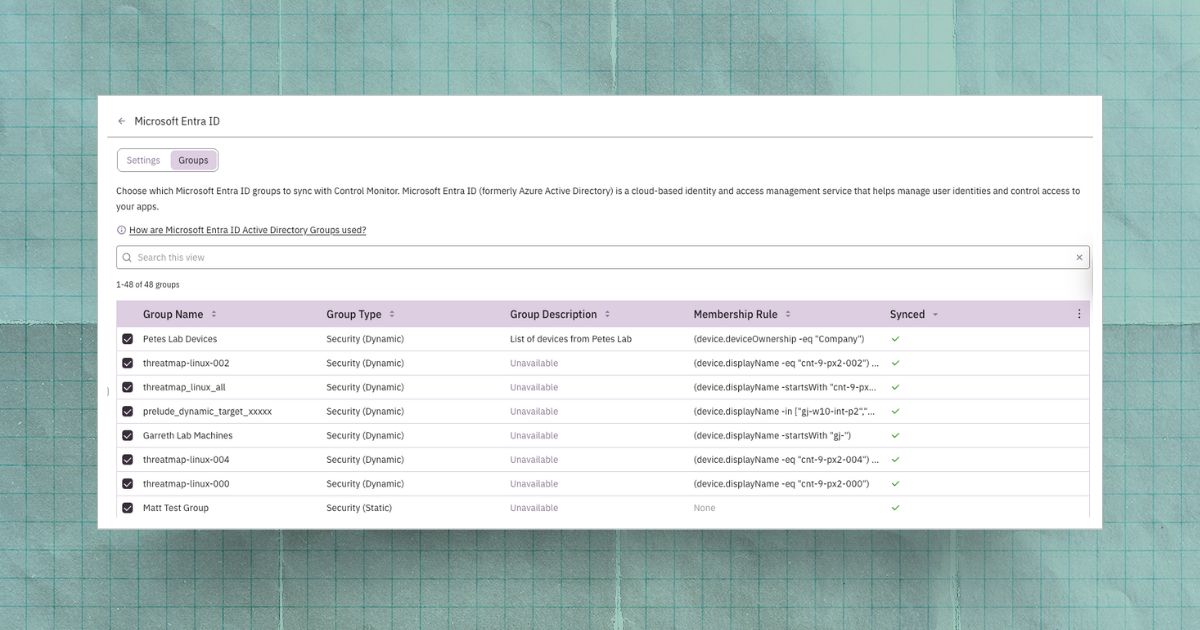

Leveraging Entra ID Groups in Prelude to Visualize Your Security Posture

Sync Entra ID groups with Prelude to visualize security posture by team, filter findings, spot drift, and prioritize remediation where risk is highest.

Joe Kaden

Product

Control Monitoring

Identity Security

Platform

Intel

How Intel Hardware Capabilities Enable Better Software Security

Discover how Intel's hardware-based security capabilities create stronger defenses than software-only approaches for modern business threats.

Pete Constantine

Product

Endpoint Security

Hardware Security

Email Security

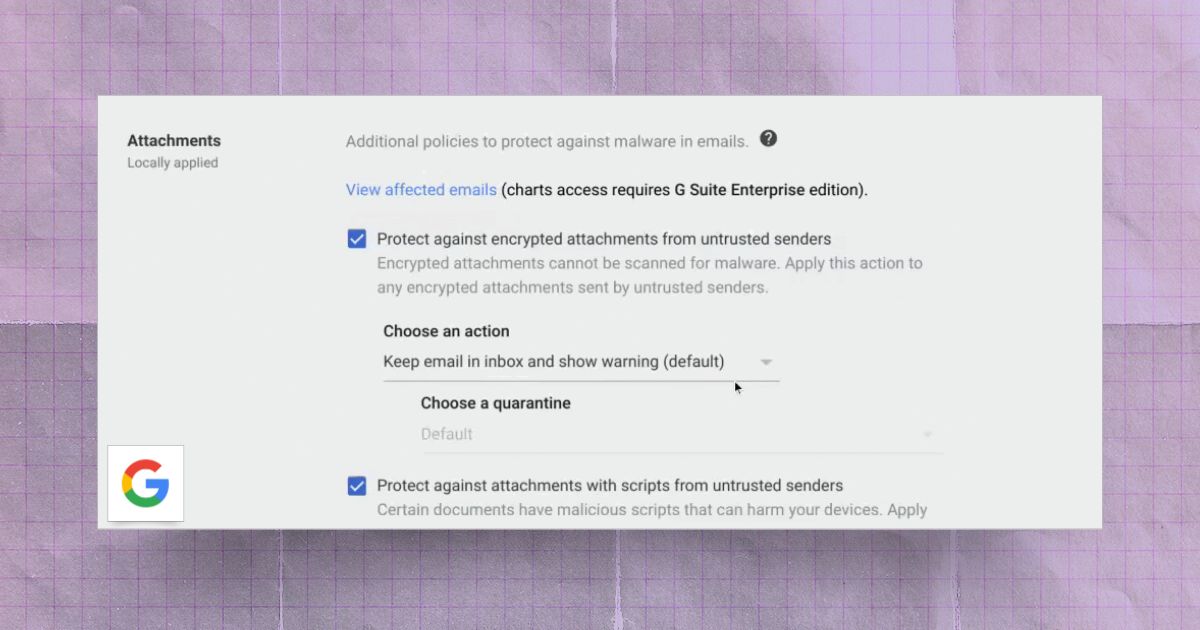

Hardening Google Workspace Email: Anti-Spoofing and Advanced Phishing Protections

Learn to configure Gmail's anti-spoofing protections, SPF/DKIM/DMARC authentication, and enhanced scanning to stop phishing attacks.

Chris Singlemann

Go-to-market

Control Monitoring

Google Workspace

Email Security

Identity Security

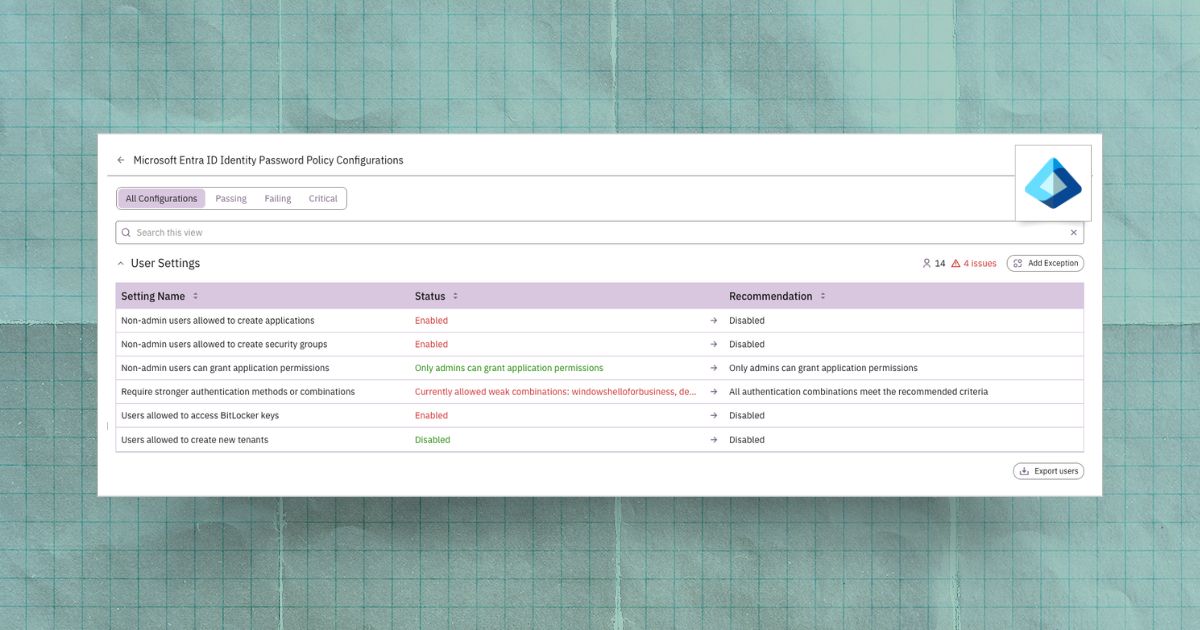

Configuring Entra ID to Prevent User-Created Apps and Security Groups

Learn how to disable risky Entra ID defaults that let users create apps and groups, preventing attacker persistence and shadow IT risks.

Joe Kaden

Product

Control Monitoring

Microsoft

Identity Security
